#GenealogyOfCode

#Binary #Computing #NumeralSystem #Babel

Computation clearly does not begin with personal computers and their direct ancestors from the twentieth century. To find the roots of the principles on which today's computation is based, one has to go back at least to the Middle Ages.

Ramon Llull (1232–1316), a Majorcan thinker, sought to develop a system for solving basic theological and philosophical questions: a method for finding and exploring all possible combinations of concepts with the help of dynamic charts. He explains this procedure most extensively in his work »Ars magna« [Great Art] (1274–1308). [1] Gottfried Wilhelm Leibniz (1646–1716) later wrote his »Dissertatio de arte combinatoria« [Dissertation on the Art of Combinatorics] (1666), in which he proposes a parallelism between logic and metaphysics inspired by Llull. [2]

In 1679 Leibniz wrote in one of his unpublished letters about a #Binary system (»dyadic« or »binaria arithmetica«), which uses only 0 and 1 as digits. He was not sure about the practical use of his invention, but frequently wrote about its possibilities in letters to his colleagues. In 1701 he told a French mathematician that he foresaw that by this means, together with infinite series, something could be achieved that would not be easy to achieve in any other way.
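The idea of writing any number with only 0 and 1 can be illustrated with a short sketch. It is a modern illustration in Python, not Leibniz's own notation or procedure; the function names `to_binary` and `from_binary` are chosen here for the example.

```python
def to_binary(n: int) -> str:
    """Return the binary digits of a non-negative integer as a string."""
    if n < 0:
        raise ValueError("this sketch handles non-negative integers only")
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        digits.append(str(n % 2))  # remainder is the next digit, lowest first
        n //= 2                    # move on to the next power of two
    return "".join(reversed(digits))


def from_binary(bits: str) -> int:
    """Read a string of 0s and 1s back into an integer."""
    value = 0
    for bit in bits:
        if bit not in ("0", "1"):
            raise ValueError(f"not a binary digit: {bit!r}")
        value = value * 2 + int(bit)  # shift left, then add the new digit
    return value


if __name__ == "__main__":
    for number in (0, 1, 2, 5, 13):
        print(number, "->", to_binary(number))
```

Repeated division by two yields the digits from right to left, which is why the list is reversed before it is joined.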

Leibniz described the first computing device (#Computing) that works with the binary system as early as 1679. The description remained unpublished and the machine was not built in his lifetime. [3]

Two centuries later Charles Babbage was working on his Difference Engine, followed by the Analytical Engine, neither of which was fully constructed under his guidance, owing to insufficient funding. The Analytical Engine would have been the first general-purpose computer, albeit a mechanical one. »The bounds of ›arithmetic‹ were, however, outstepped the moment the idea of applying the cards had occurred; and the Analytical Engine does not occupy common ground with mere ›calculating machines‹« [4], wrote Ada Lovelace (1815–1852), acknowledged today as the first programmer, in her notes on Babbage’s computing automaton. This early device operated with a decimal #NumeralSystem. Today's computers are based on a binary numeral system, first described by Leibniz, and on the two-valued logic later formalized by George Boole (1815–1864).

Boole first cast logic into algebraic form in his book »The Mathematical Analysis of Logic« (1847), introducing what is now called Boolean algebra. [5] Boole’s two-valued system rests on three basic logical operations: AND, OR, and NOT. [6]
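The three operations can be written out over the values 0 and 1 in a few lines. The following is a minimal sketch in modern Python rather than in Boole's own notation; the function names are chosen for the example, and the last function checks De Morgan's law, which expresses OR through AND and NOT.

```python
from itertools import product


def AND(a: int, b: int) -> int:
    """1 only if both inputs are 1."""
    return a & b


def OR(a: int, b: int) -> int:
    """1 if at least one input is 1."""
    return a | b


def NOT(a: int) -> int:
    """Swap 0 and 1."""
    return 1 - a


def truth_table():
    """List (a, b, a AND b, a OR b, NOT a) for every input pair."""
    return [(a, b, AND(a, b), OR(a, b), NOT(a))
            for a, b in product((0, 1), repeat=2)]


def or_via_and_not(a: int, b: int) -> int:
    """De Morgan's law: a OR b equals NOT(NOT a AND NOT b)."""
    return NOT(AND(NOT(a), NOT(b)))


if __name__ == "__main__":
    print("a b AND OR NOT(a)")
    for row in truth_table():
        print(*row)
```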

This system was not put into operation until »Claude Shannon, in 1937, proved in what is probably the most consequential M.A. thesis ever written, that simple telegraph switching relays can implement, by means of their different interconnections, the whole Boolean algebra.« [7]
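How interconnected switches can carry out Boolean algebra may be sketched as follows. The model is a deliberate simplification and not Shannon's own formalism: contacts wired in series behave like AND, contacts wired in parallel like OR, and a normally closed contact like NOT. All function names are assumptions made for this illustration. From these three building blocks the sketch wires up a one-bit adder and chains it to add two small numbers.

```python
def series(*contacts: bool) -> bool:
    """Current passes a series connection only if every contact is closed (AND)."""
    return all(contacts)


def parallel(*contacts: bool) -> bool:
    """Current passes a parallel connection if any contact is closed (OR)."""
    return any(contacts)


def normally_closed(coil: bool) -> bool:
    """A normally closed contact opens when its coil is energized (NOT)."""
    return not coil


def xor(a: bool, b: bool) -> bool:
    """Exactly one of the two inputs is on, built only from the circuits above."""
    return parallel(series(a, normally_closed(b)),
                    series(normally_closed(a), b))


def full_adder(a: bool, b: bool, carry_in: bool):
    """Add three one-bit inputs; return (sum bit, carry out)."""
    partial = xor(a, b)
    total = xor(partial, carry_in)
    carry_out = parallel(series(a, b), series(partial, carry_in))
    return total, carry_out


def add(x: int, y: int, width: int = 8) -> int:
    """Add two integers bit by bit; results wrap around at 2**width."""
    carry = False
    result = 0
    for i in range(width):
        bit_x = bool((x >> i) & 1)
        bit_y = bool((y >> i) & 1)
        total, carry = full_adder(bit_x, bit_y, carry)
        result |= int(total) << i
    return result


if __name__ == "__main__":
    print(add(5, 3))
```

Because the adder has a fixed width, a sum that does not fit into eight bits wraps around, much as a hardware register of that size would.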

Also in 1937, Alan Turing (1912–1954) built a Boolean logic multiplier and proposed a theory of computability in his essay on the »Entscheidungsproblem« [decision problem]. [8] He had written the paper the previous year while working on his well-known Turing machine, which was not a physically existing computer but a mathematical model of computation. With his multiplier based on Boolean logic, Turing tried »to embody the logical design of a Turing machine in a network of relay-operated switches.« [9]

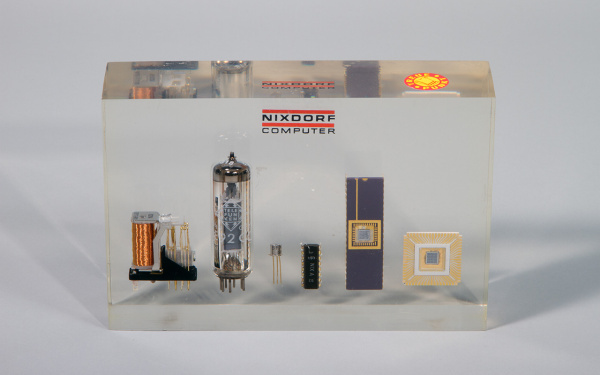

Soon after, from the 1940s onward, with the appearance of electronic computers, various programming languages were designed; assembly language (asm) was one of the first. This low-level programming language can be converted into executable machine code in one step, as there is a very strong correspondence between the language and the machine code. From the 1950s onward high-level programming languages started to replace their »low« antecedents. Dozens of programming languages have been written and developed, starting with Fortran, then ALGOL (ALGOrithmic Language), Pascal, C++, Java, and Python, to name just a few. »This postmodern Tower of Babel reaches from simple operation codes whose linguistic extension is still hardware configuration, passing through an assembler whose extension is this very opcode, up to high-level programming languages whose extension is that very assembler.« [10] (#Babel)
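The close correspondence between assembly language and machine code can be made concrete with a toy assembler. The instruction set below (`LOAD`, `ADD`, `STORE`, `HALT` and their opcode values) is hypothetical and invented purely for illustration; it does not belong to any real processor. Each mnemonic is translated into exactly one opcode byte, followed by its operands.

```python
# Hypothetical 8-bit instruction set, invented for this illustration only.
OPCODES = {
    "LOAD": 0x01,   # load a value into the accumulator
    "ADD": 0x02,    # add a value to the accumulator
    "STORE": 0x03,  # store the accumulator at an address
    "HALT": 0xFF,   # stop the machine
}


def assemble(source: str) -> bytes:
    """Translate assembly text into machine code, one line at a time."""
    program = bytearray()
    for line in source.splitlines():
        line = line.split(";", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        mnemonic, *operands = line.split()
        program.append(OPCODES[mnemonic.upper()])  # one mnemonic, one opcode
        for operand in operands:
            program.append(int(operand, 0) & 0xFF)  # accepts 10 or 0x0A
    return bytes(program)


if __name__ == "__main__":
    listing = """
        LOAD 10      ; put 10 into the accumulator
        ADD 0x05     ; add 5
        STORE 0x20   ; write the result to address 0x20
        HALT
    """
    print(assemble(listing).hex(" "))
```

The translation needs no analysis of the program's meaning, only a table lookup per line, which is what distinguishes an assembler from a compiler for a high-level language.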

All these languages ultimately rest on a binary number system, a sequence of »ons« and »offs« that allows electricity in the circuit to flow or stop. Despite the simplicity of their basic components, programming languages can describe exceedingly complex operations. The computing devices mentioned above all run on binary code, except Babbage’s machines, which used the decimal system. Owing to Shannon’s work and the introduction of transistors, binary systems became ubiquitous in computing.

In addition to algorithms (see #Algorithm key area #Encoding) and calculations, anything that can be encoded can be described by binary code. Perhaps the best-known example is ASCII (American Standard Code for Information Interchange), a character encoding for the text displayed on a computer screen, which was developed from telegraph code beginning in 1960.
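How text becomes binary code can be shown with ASCII itself, which assigns each character a number that fits into seven bits. The sketch below uses Python's built-in `ascii` codec; the function names `ascii_bits` and `bits_to_text` are chosen for the example.

```python
def ascii_bits(text: str) -> list[str]:
    """Encode text as ASCII and return one 7-bit string per character."""
    return [format(byte, "07b") for byte in text.encode("ascii")]


def bits_to_text(groups: list[str]) -> str:
    """Turn 7-bit strings back into the characters they stand for."""
    return bytes(int(group, 2) for group in groups).decode("ascii")


if __name__ == "__main__":
    for char, bits in zip("Hi", ascii_bits("Hi")):
        print(char, bits)
```

Characters outside the ASCII range, such as »é«, raise a `UnicodeEncodeError` here, a reminder that ASCII covers only 128 code points.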

The capacities of current computers may not be sufficient for the amount and complexity of computing in the future. The development of modern computers has been very fast, which is even more striking when compared to the improved performance of cars. If cars made in 1971 had improved at the same rate as computer chips, then by 2015 new models would have had top speeds of about 680 million kilometers per hour. [11]

Quantum computing could be an answer to this recent and seemingly inevitable growth in computing demand. In a quantum computer, logical operations are performed at the atomic level. Atoms can register more than classical bits do: they are able to register 0 and 1 at the same time, and thus quantum bits, or qubits, are more efficient than classical bits because they can perform two computations simultaneously. [12]

»How long can computation continue in the universe? Current observational evidence suggests that the universe will expand forever. As it expands, the number of ops performed and the number of bits available within the horizon will continue to grow.« [13]

Lívia Nolasco-Rózsás

[1] Raimundus Llullus, »Opera,« 2 vols., Frommann-Holzboog, Stuttgart-Bad Cannstatt, 1996, pp. 228–663.

See also Anthony Bonner, »The Art and Logic of Ramon Llull: A User’s Guide,« Brill, Leiden, Boston, 2007.

[2] See Ana H. Maróstica, »›Ars Combinatoria‹ and Time: Llull, Leibniz and Peirce,« in: »Studia Lulliana,« vol. 32, 1992, pp. 105–134, here p. 111.

[3] See Hermann J. Greve, »Entdeckung der binären Welt,« in: »Herrn von Leibniz’ Rechnung mit Null und Eins,« Siemens Aktiengesellschaft, Berlin, Munich, 1966, pp. 21–31.

[4] L. F. Menabrea, »Sketch of the Analytical Engine Invented by Charles Babbage,« translated from the French and supplemented with notes upon the memoir by Ada Lovelace, printed by Richard and John E. Taylor, London, 1843, p. 696f.

[5] See George Boole, »The Mathematical Analysis of Logic: Being an Essay Towards a Calculus of Deductive Reasoning,« Macmillan, Cambridge, 1847.

[6] See Paul J. Nahin, »The Logician and the Engineer: How George Boole and Claude Shannon Created the Information Age,« Princeton University Press, Princeton (NJ), Oxford, 2013.

[7] Friedrich Kittler, »There Is No Software,« in: »Stanford Literature Review«, vol. 9, no. 1, Spring 1992, pp. 81–90, here p. 88.

[8] See Alan Turing, »On Computable Numbers, with an Application to the Entscheidungsproblem,« in: »Proceedings of the London Mathematical Society«, ser.: 2, vol. 42, no. 1, January 1937, pp. 230–265.

[9] Andrew Hodges, »Alan Turing: The Enigma«, Princeton University Press, Princeton (NJ), Oxford, 2014, p. 177.

[10] Kittler 1992, p. 82.

[11] See Tim Cross, »Vanishing point: The rise of the invisible computer,« in: »The Guardian«, 01/26/2017, available online at: https://www.theguardian.com/technology/2017/jan/26/vanishing-point-rise…, accessed 09/13/2017.

[12] Seth Lloyd, »Programming the Universe: A Quantum Computer Scientist Takes on the Cosmos«, Vintage Books, New York, 2007, pp. 136–139.

[13] Ibid., p. 206.

Brief History of Computer Programming

4:07 min.

CHM (Computer History Museum) Live│The History (and the Future) of Software

69:27 min.

Keynote session: The History of Programming - Mark Rendle [DevCon 2016]

65:25 min.

The Machine that Changed the World (Documentary)

Part I: Giant Brains, 55:41 min. (BBC)

Part II: Inventing The Future, 57:28 min.

Part III: The Paperback Computer, 55:16 min. (NBC Universal)

Part IV: The Thinking Machine, 56:57 min.