Long Short Term Memory

2017

- Title: Long Short Term Memory
- Year: 2017
- Copy Number: 212
- Medium / Material / Technic: digital prints and text

On display in the exhibition from September 1, 2018, to June 2, 2019.

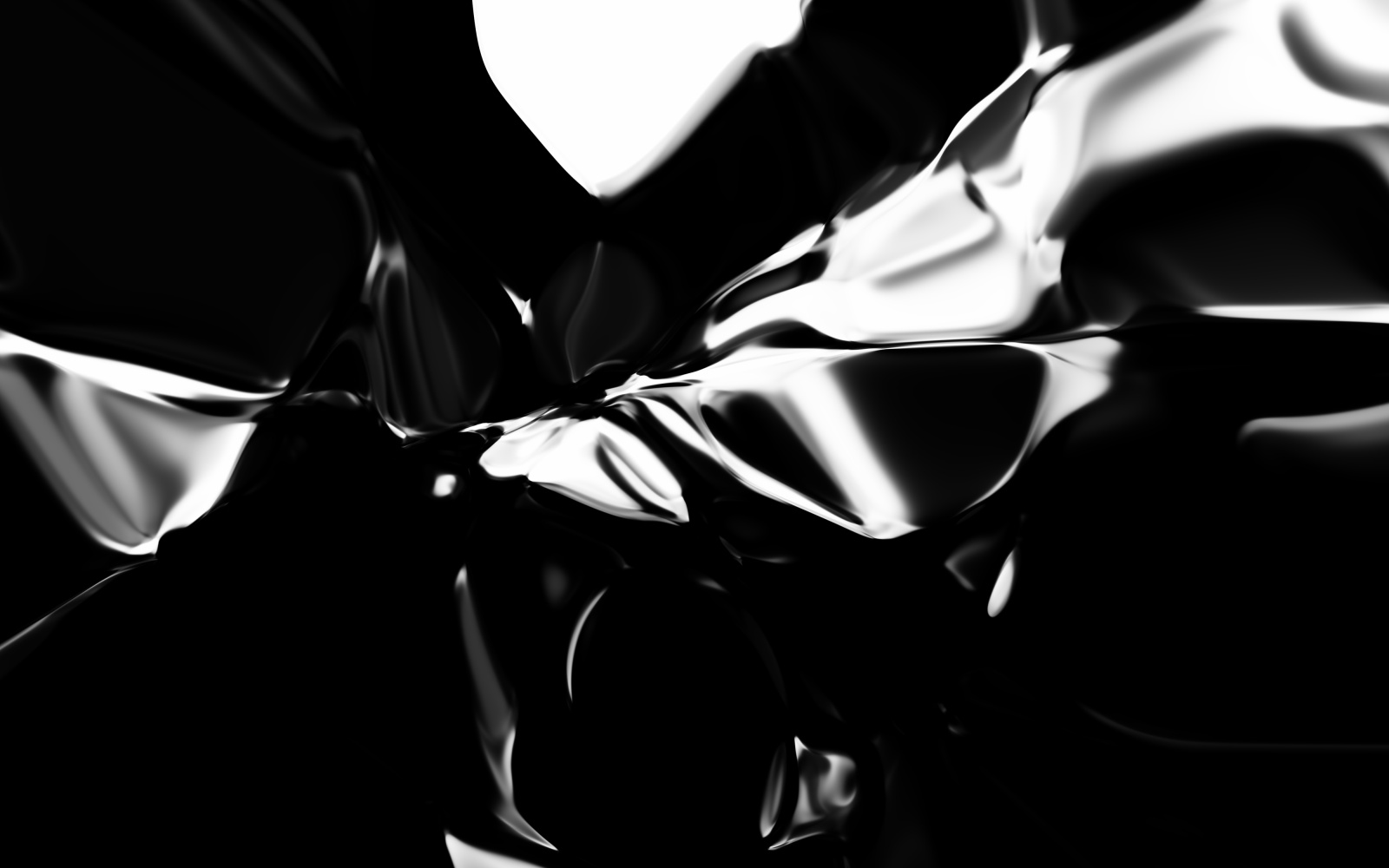

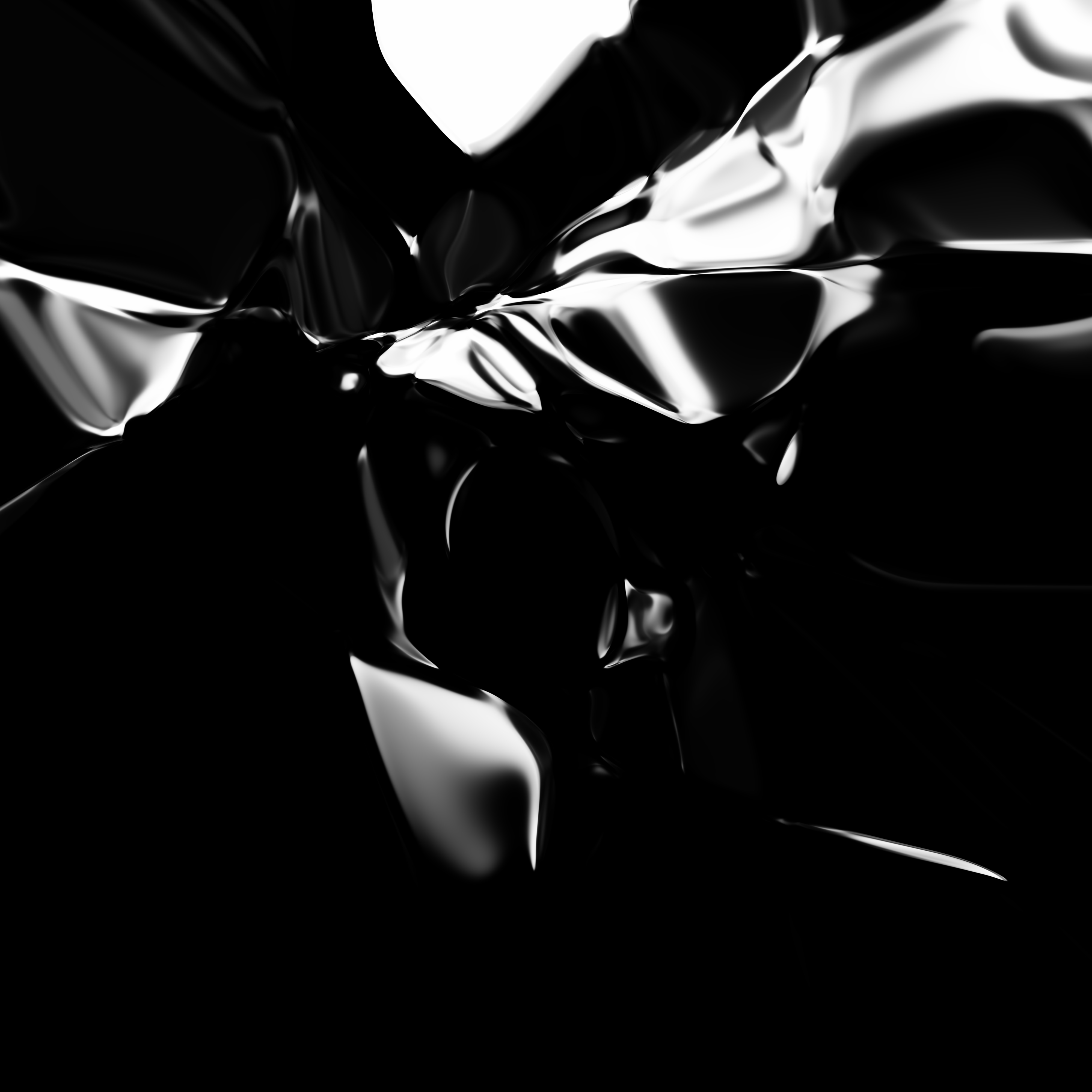

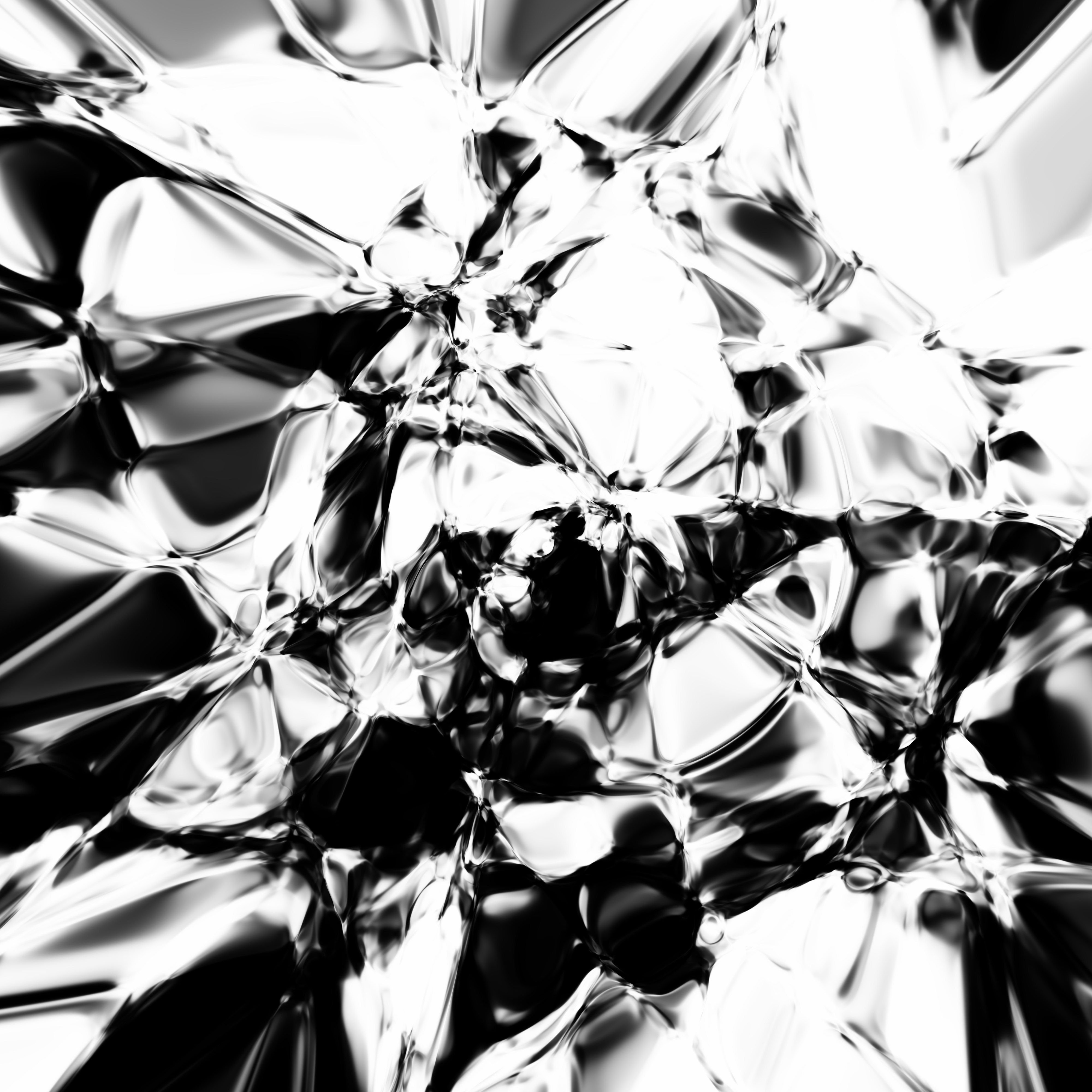

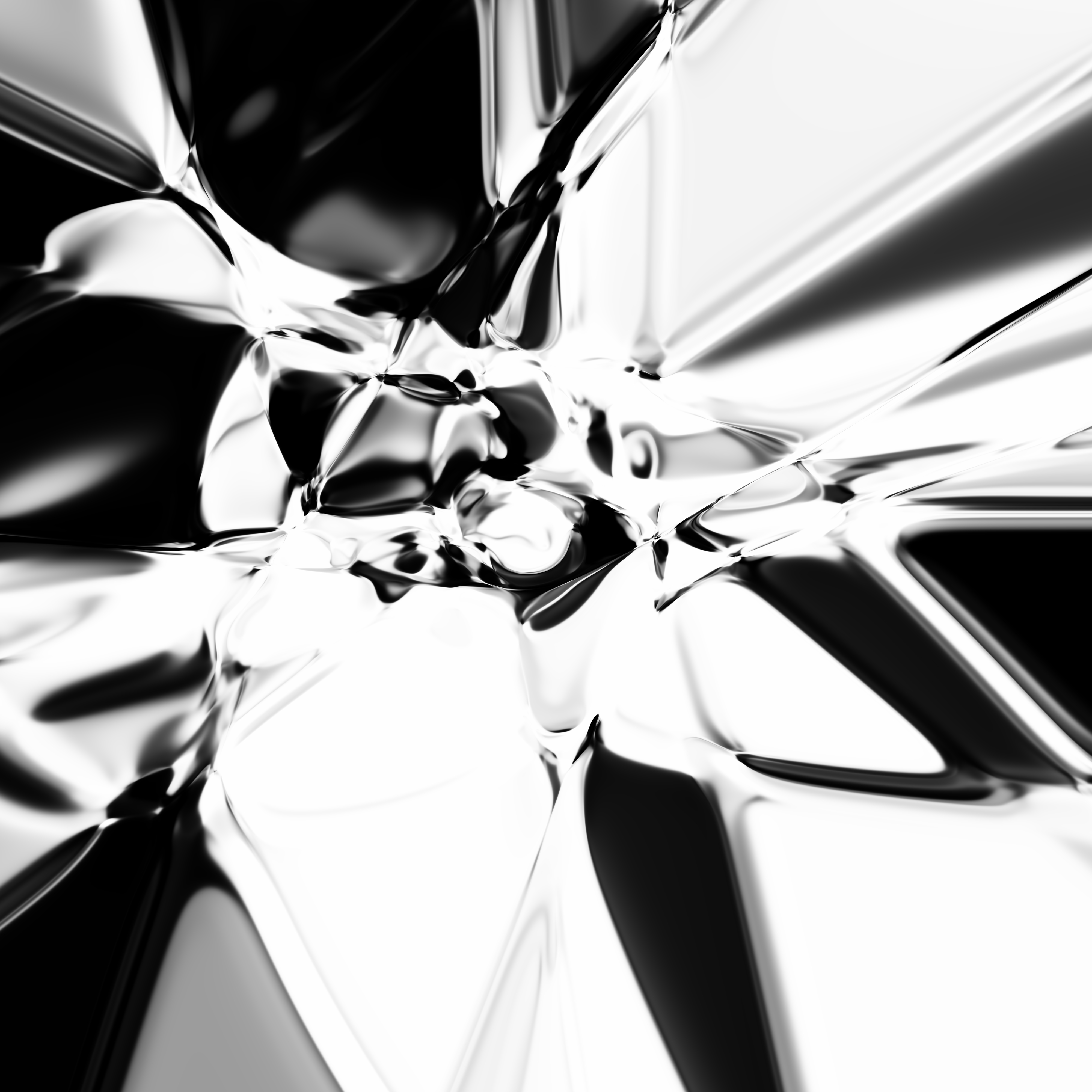

»Long Short Term Memory« comprises texts and images produced by artificial neural networks imbued with memory, exploring architectures for forgetting within the realm of machine learning.

The prints on display are produced by hijacking the latent space of a neural network in an attempt to reveal the structure of the activation functions used in commodified deep learning models. A long short-term memory (LSTM) network is injected with random data drawn from a Pareto distribution. This input is fed forward through several layers of the untrained network, whose output values are rendered as intensities of light. The output is then passed to a second network, an autoencoder, which scales it to arbitrary sizes using a lossy representation distributed across its neurons, creating its own artifacts in the process. These »exposures«, which are presented in the exhibition, reveal formal aspects of the network’s architecture.
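The first stage of this process (Pareto-distributed noise pushed through a stack of untrained LSTM layers, with the last layer's output read as light intensities) can be illustrated with a minimal sketch. This is not the artist's code: the layer count, layer width, Pareto shape parameter `alpha`, the uniform weight initialization, and the mapping from the LSTM's output range (-1, 1) to gray levels 0–255 are all assumptions, and the names `LSTMCell`, `lstm_exposure`, and `to_pgm` are hypothetical. The autoencoder upscaling stage is not sketched.

```python
import math
import random


def sigmoid(x):
    # Numerically stable logistic function (avoids overflow for large |x|).
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def random_matrix(rows, cols, rng, scale=0.5):
    # Assumed "untrained" initialization: uniform values in [-scale, scale].
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LSTMCell:
    """A single LSTM cell with random, never-trained weights (hypothetical helper)."""

    def __init__(self, input_size, hidden_size, rng):
        concat = input_size + hidden_size
        # One weight matrix per gate: input (i), forget (f), output (o), candidate (c).
        self.w = {k: random_matrix(hidden_size, concat, rng) for k in "ifoc"}
        self.b = {k: [0.0] * hidden_size for k in "ifoc"}

    def step(self, x, h, c):
        z = x + h  # concatenate current input with previous hidden state
        pre = {k: [a + b for a, b in zip(matvec(self.w[k], z), self.b[k])] for k in "ifoc"}
        i = [sigmoid(v) for v in pre["i"]]
        f = [sigmoid(v) for v in pre["f"]]
        o = [sigmoid(v) for v in pre["o"]]
        cand = [math.tanh(v) for v in pre["c"]]
        # Cell state: keep part of the old memory (forget gate), add new candidate values.
        c_new = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, cand)]
        h_new = [ov * math.tanh(cv) for ov, cv in zip(o, c_new)]
        return h_new, c_new


def lstm_exposure(width=16, height=16, layers=3, alpha=3.0, seed=0):
    """Feed Pareto noise through stacked untrained LSTM layers; one image row per time step."""
    rng = random.Random(seed)
    cells = [LSTMCell(width, width, rng) for _ in range(layers)]
    states = [([0.0] * width, [0.0] * width) for _ in range(layers)]
    image = []
    for _ in range(height):
        x = [rng.paretovariate(alpha) for _ in range(width)]  # heavy-tailed random input
        for k, cell in enumerate(cells):
            h, c = cell.step(x, *states[k])
            states[k] = (h, c)
            x = h  # each layer's hidden state feeds the next layer
        # Hidden values lie in (-1, 1); map them to gray levels 0..255.
        image.append([round((v + 1.0) / 2.0 * 255) for v in x])
    return image


def to_pgm(image):
    """Serialize a grayscale image as a plain-text PGM (P2) file."""
    height, width = len(image), len(image[0])
    lines = ["P2", f"{width} {height}", "255"]
    lines += [" ".join(str(p) for p in row) for row in image]
    return "\n".join(lines) + "\n"
```

Writing `to_pgm(lstm_exposure(width=64, height=64))` to a `.pgm` file gives an image that most viewers can open. Because the weights are never trained, any visible structure comes from the gate nonlinearities and the recurrent memory rather than from learned features, which is the kind of formal trace the text describes.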